No Touch, No Voice – Just a few hand movements are enough to do shopping

Introduction:

Even before languages were invented, signs were the only means of human communication. People used gestures to convey a specific intent to one another. We have evolved a lot since then: humans developed spoken languages, then programming languages, then voice chat and so on. Yet gestures remain one of the easiest ways to communicate with another person or with a system. In this blog, we shall see how gestures can become a new eCommerce channel.

Gesture recognition:

Gesture recognition is the mathematical interpretation, by a computing device, of human motion made mainly with the hands or body. Gestures offer a more natural way to issue an input command than an app or a browser. Ease and convenience are the key advantages of gesture technology. Gesture recognition makes the interaction between human and machine more human friendly.

Objective:

In this blog, we shall build a simple hand gesture, a diagonal drag downward (detected by measuring the direction of movement), that toggles the mute button between active and inactive.

Required items:

Hardware:

USB camera (any webcam will do)

Low-end computer (or Raspberry Pi)

Software:

Python (preferably in an Anaconda environment)

OpenCV, NumPy, imutils, pyautogui

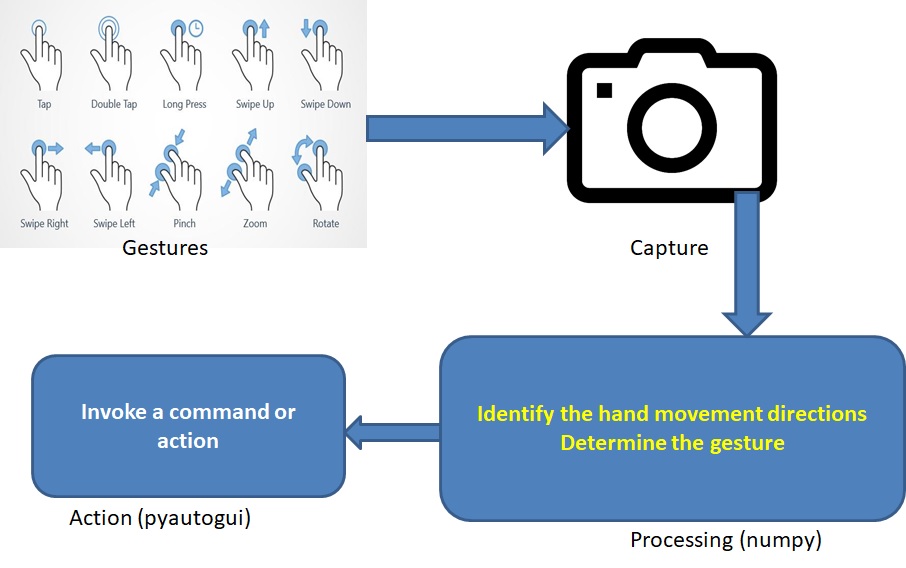

Architecture Diagram:

This architecture diagram depicts the flow: the user makes a gesture, the camera captures it, the program processes the frames, and the specified action is triggered.

Steps:

- Install required packages

- Identify the color region by segmentation and thresholding and then mask it

- Track and trace the color to identify the gesture

- Trigger an action when the expected gesture is made.

Step 1 Installation:

For capturing and processing images, we shall use OpenCV and NumPy.

For gesture identification, we shall use a package called imutils.

pip install imutils

imutils is a set of convenience functions that make basic image processing operations such as translation, rotation, resizing, skeletonization, and displaying Matplotlib images easier with OpenCV and Python. Thanks to Adrian Rosebrock, who built it and open-sourced it.

pyautogui is the other package used here. It lets a Python program send keyboard and mouse input to the operating system, which is how a recognised gesture will trigger an action.

pip install pyautogui

To verify the installation, run the commands below from a Python prompt:

import pyautogui

pyautogui.press('f1')

Step 2:

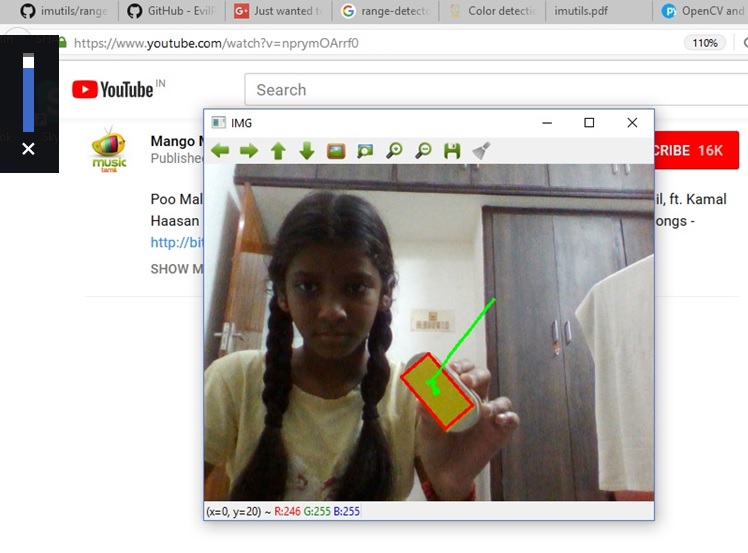

Masking yellow colour

Run the range-detector tool from imutils. (It opens three windows. Watch the thresh window and adjust the trackbars until only the yellow colour is shown.)

The values found this way are then used to process the camera feed:

- Take one frame at a time and convert it from BGR (the channel order OpenCV uses for captured frames) to the HSV colour space for better yellow colour segmentation.

- Apply a mask for the yellow colour.

- Blur and threshold the mask.

- If a yellow region is found and its area crosses a reasonable threshold, that region is the one tracked in the next step.

Once masking is done, one can run gesture-action.py.

Step 3:

Run the gesture action Python program. Now that the yellow colour is identified, move the yellow object so that its path is drawn on screen in a different colour. The idea is to identify the direction of each movement and then derive the action from that direction.
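One way to turn tracked points into a direction is to bucket the angle between successive points into eight compass sectors. The sketch below is an assumption about how this could be done, not the logic of the referenced program: the names `direction` and `gesture_from_path` and the `min_dist` value are hypothetical, and it assumes the frame is not mirrored.

```python
import math

# Compass labels in counter-clockwise order, starting at East (0 degrees).
COMPASS = ("E", "NE", "N", "NW", "W", "SW", "S", "SE")


def direction(p0, p1, min_dist=20):
    """Compass direction of the move p0 -> p1, or None if it is too short."""
    dx = p1[0] - p0[0]
    dy = p0[1] - p1[1]  # image y grows downward, so flip it
    if math.hypot(dx, dy) < min_dist:
        return None
    angle = math.degrees(math.atan2(dy, dx)) % 360
    # Each sector is 45 degrees wide and centred on its compass label.
    return COMPASS[int((angle + 22.5) // 45) % 8]


def gesture_from_path(points, min_dist=20):
    """Collapse a list of (x, y) points into a tuple of distinct directions."""
    if not points:
        return ()
    gesture = []
    anchor = points[0]
    for point in points[1:]:
        d = direction(anchor, point, min_dist)
        if d is None:
            continue  # not enough movement yet, keep the same anchor
        if not gesture or gesture[-1] != d:
            gesture.append(d)
        anchor = point
    return tuple(gesture)
```

A path that moves steadily down and to the left in the image, for example `[(200, 50), (170, 80), (140, 110)]`, collapses to the single-element tuple `("SW",)`.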

Step 4:

Assign actions to the identified movements. Here, for mute/unmute, I put in these commands:

if processed_gesture == ("SW",):
    pyautogui.press('volumemute')
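When more gestures are added, a lookup table can replace a chain of `if` statements. The following is only a sketch: `GESTURE_KEYS` and `act_on_gesture` are hypothetical names, and the key-press function is passed in as an argument so that the mapping can be tried out without sending real key presses.

```python
# Map gesture tuples to pyautogui key names.
# Only the ("SW",) entry comes from this blog; add further entries as needed.
GESTURE_KEYS = {
    ("SW",): "volumemute",
}


def act_on_gesture(processed_gesture, press, gesture_keys=GESTURE_KEYS):
    """Press the key mapped to the gesture; return True if one was found."""
    key = gesture_keys.get(processed_gesture)
    if key is None:
        return False  # unknown gesture, do nothing
    press(key)
    return True
```

In the real program, the call would be `act_on_gesture(processed_gesture, pyautogui.press)`.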

Next steps:

We can map different gestures to different actions. By combining the power of OpenCV with gesture recognition, we can build many more utilities.

Possible use cases:

In a retail store, customers can use a kiosk to order an item.

In an airport, travellers can use a kiosk to find their flight timings.

References:

https://github.com/EvilPort2/SimpleGestureRecognition

https://github.com/jrosebr1/imutils/

http://pyautogui.readthedocs.io/en/latest/keyboard.html
