DynamoDB: Batch Write Vs Transactional Write

If you have worked with DynamoDB, you may know that it offers two features for grouping operations on the database: transactions and batching. In this post I will try to point out the differences between the two.

On the surface, transactions and batch operations look the same: both offer a way to perform multiple operations on the database in one go. But they are very different in how they operate.

Transactions

Transactions in DynamoDB are closely related to the transactions we see in the RDBMS world. We can group up to 25 operations in a transaction (AWS has since raised this limit to 100), with a maximum aggregate size of 4 MB. The transaction API is available for both writing and reading data. Transactions provide all-or-nothing semantics: every operation in the transaction has to succeed for the changes to be applied to the database. If any one operation fails, the transaction is canceled (a failed transaction rolls back any changes it made).
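As a rough illustration of the all-or-nothing behaviour, here is a minimal Python sketch of a transactional write using the boto3 low-level client's `transact_write_items` call. The table names (`Orders`, `Inventory`), the attribute names, and the helper functions are all hypothetical and chosen only for this example.

```python
from typing import Any, Dict, List


def build_order_transaction(order_id: str, product_id: str, quantity: int) -> List[Dict[str, Any]]:
    """Build the TransactItems list for one all-or-nothing write.

    Table and attribute names are hypothetical, for illustration only.
    """
    return [
        {
            # Create the order, but only if no order with this id exists yet.
            "Put": {
                "TableName": "Orders",
                "Item": {
                    "order_id": {"S": order_id},
                    "product_id": {"S": product_id},
                    "ordered_units": {"N": str(quantity)},
                },
                "ConditionExpression": "attribute_not_exists(order_id)",
            }
        },
        {
            # Decrement the stock, but only if enough units are left.
            "Update": {
                "TableName": "Inventory",
                "Key": {"product_id": {"S": product_id}},
                "UpdateExpression": "SET units_in_stock = units_in_stock - :q",
                "ConditionExpression": "units_in_stock >= :q",
                "ExpressionAttributeValues": {":q": {"N": str(quantity)}},
            }
        },
    ]


def place_order(order_id: str, product_id: str, quantity: int) -> None:
    """Send both writes as one transaction; neither is applied if either condition fails."""
    import boto3  # imported lazily so the builder above works without AWS access

    client = boto3.client("dynamodb")
    client.transact_write_items(
        TransactItems=build_order_transaction(order_id, product_id, quantity)
    )
```

If either condition fails, for example because the stock is too low, the call raises an error and neither table is changed.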

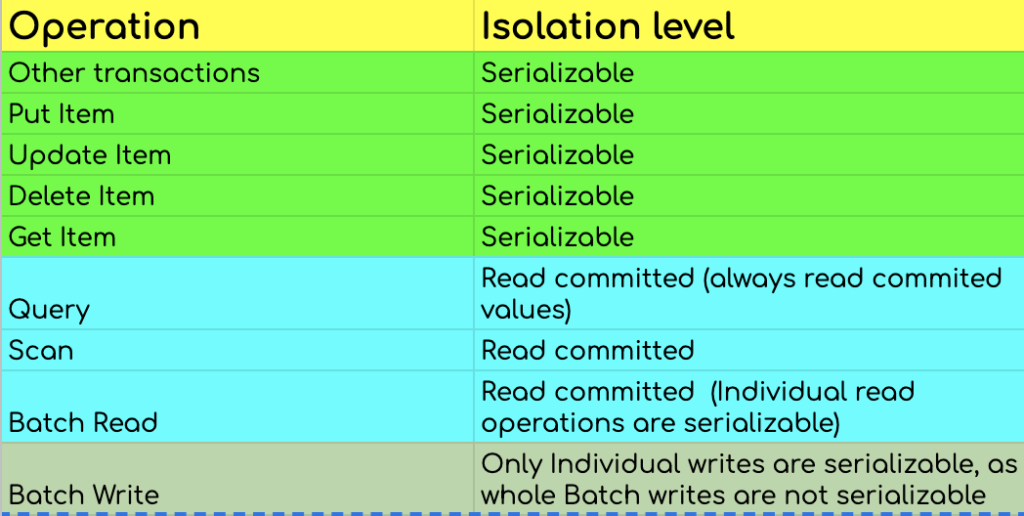

Transactions are ACID compliant. They provide serializable isolation with respect to other transactions and single-item write operations. The following table summarizes the isolation level between a transaction and other operations.

| Operation | Isolation level |
| --- | --- |
| Other transactions | Serializable |
| Put Item | Serializable |
| Update Item | Serializable |
| Delete Item | Serializable |
| Get Item | Serializable |
| Query | Read committed (always reads committed values) |
| Scan | Read committed |
| Batch Read | Read committed (individual read operations are serializable) |
| Batch Write | Only individual writes are serializable; the batch write as a whole is not |

A transaction may get canceled if another ongoing transaction touches one or more of its items. We can’t have more than one operation on the same item in a single transaction. It is advisable to keep the number of operations in a transaction to the absolute minimum to increase its chance of success.

In the case of global tables, transactions are supported only within a single region. Updates to indexes and streams are done asynchronously.

Transactions are a good choice when you need to update two or more tables atomically and roll back all the operations if any one of them fails.
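Since a transaction cannot contain two operations on the same item, it can help to check a request locally before sending it. The following Python sketch is one way to do that. The function name and the `key_schema` argument (a mapping from table name to its key attribute names) are assumptions made for this example, and it only handles string and number keys.

```python
import json
from typing import Any, Dict, List, Tuple


def find_duplicate_targets(
    transact_items: List[Dict[str, Any]],
    key_schema: Dict[str, List[str]],
) -> List[Tuple[str, Tuple[str, ...]]]:
    """Return (table, key) pairs targeted by more than one action.

    key_schema maps each table name to its key attribute names; it is
    supplied by the caller and is a hypothetical helper input.
    """
    seen = set()
    duplicates = []
    for entry in transact_items:
        # Each entry holds exactly one action: Put, Update, Delete or ConditionCheck.
        (action, params), = entry.items()
        table = params["TableName"]
        # Put carries the whole item; the other actions carry only the key.
        source = params["Item"] if action == "Put" else params["Key"]
        key = tuple(json.dumps(source[name], sort_keys=True) for name in key_schema[table])
        target = (table, key)
        if target in seen:
            duplicates.append(target)
        seen.add(target)
    return duplicates
```

An empty result means no item is targeted twice. Anything else points at the operations that would need to be merged or moved into a separate transaction.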

Batch Operations

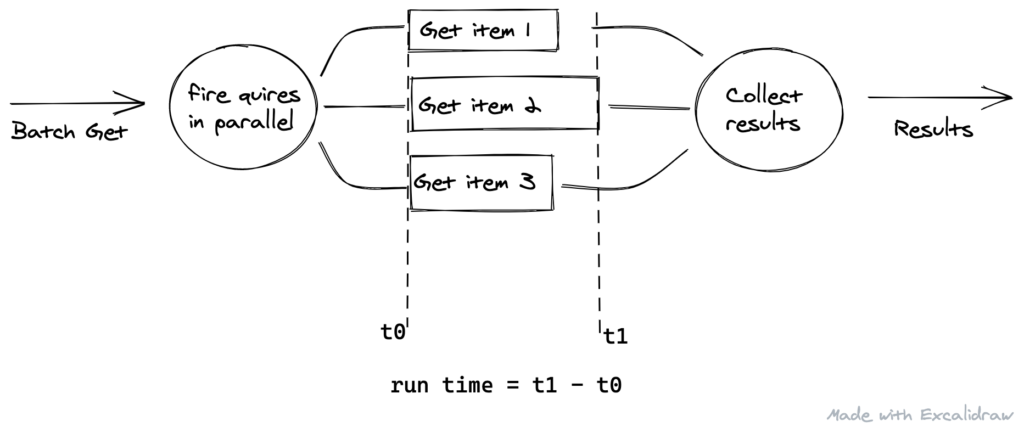

Batch operations are primarily a performance improvement technique. They save you the network round trips required for individual operations. As with transactions, DynamoDB has APIs for batch get and batch write. DynamoDB runs all the operations in a batch in parallel. If there are 10 individual operations in a batch, DynamoDB will internally fire all of them at the same time and send back the results once all of them have executed.

Batch get supports up to 100 read operations (max of 16 MB of data), and batch write supports up to 25 write operations (max of 16 MB of data; these can be put and delete operations but not update operations). It is possible for some operations in a batch request to succeed and others to fail, which is very different from a transaction. The batch request as a whole fails only if all the operations in the batch fail.

Batch operations don’t support conditions on individual items.
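Because a batch can partially succeed, the caller has to deal with whatever was not written. The Python sketch below splits a list of write requests into groups of 25 and resends anything DynamoDB returns under `UnprocessedItems` in the `batch_write_item` response. The helper names, the retry count, and the backoff delay are assumptions for this example, not recommended values.

```python
import time
from typing import Any, Dict, Iterator, List

MAX_BATCH_SIZE = 25  # write operations allowed per batch write request


def chunked(requests: List[Dict[str, Any]], size: int = MAX_BATCH_SIZE) -> Iterator[List[Dict[str, Any]]]:
    """Yield consecutive slices of at most `size` requests."""
    for start in range(0, len(requests), size):
        yield requests[start:start + size]


def batch_write_all(
    client: Any,
    table_name: str,
    requests: List[Dict[str, Any]],
    max_attempts: int = 5,
    base_delay: float = 0.05,
) -> List[Dict[str, Any]]:
    """Write all requests, resending whatever comes back as unprocessed.

    `client` is expected to behave like boto3.client("dynamodb").
    Returns the requests still unprocessed after max_attempts tries.
    """
    leftover: List[Dict[str, Any]] = []
    for chunk in chunked(requests):
        pending: Dict[str, Any] = {table_name: chunk}
        for attempt in range(max_attempts):
            response = client.batch_write_item(RequestItems=pending)
            pending = response.get("UnprocessedItems") or {}
            if not pending:
                break
            if attempt < max_attempts - 1:
                # Back off a little longer before each retry.
                time.sleep(base_delay * (2 ** attempt))
        leftover.extend(pending.get(table_name, []))
    return leftover
```

Each request in the list is a dictionary of the form `{"PutRequest": {"Item": {...}}}` or `{"DeleteRequest": {"Key": {...}}}`. A non-empty return value means some writes never went through and need to be handled by the caller.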

Could this work on a raspberry pi?

Any advice?

You mean you want to run a program on a Raspberry Pi that connects to AWS DynamoDB? Yes, it will work.

BatchWrite also doesn’t support updates; you can only do put or delete operations in a batch.