Tomcat threading model

Tomcat is a popular open source servlet container in the Java ecosystem. Tomcat is the default container for spring boot web applications (spring boot also supports other containers). In this post I will describe the tomcat threading model.

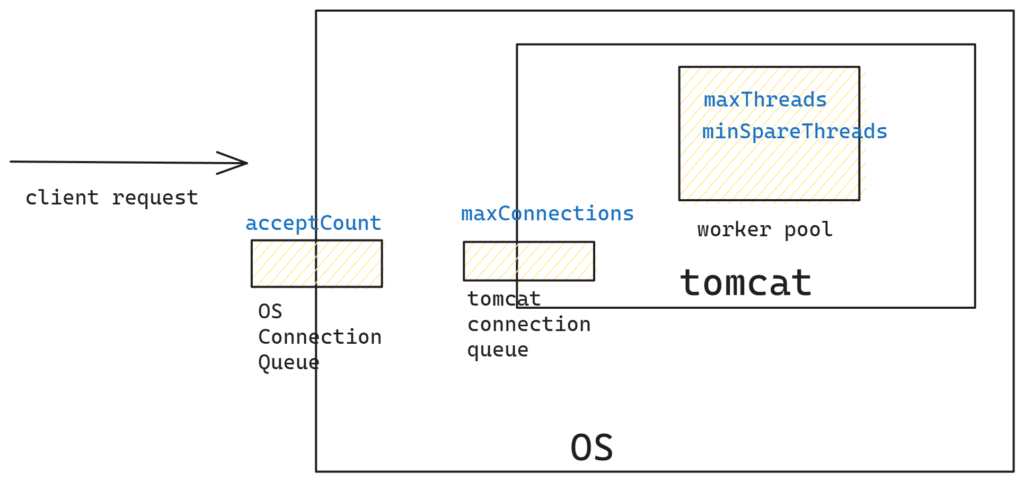

Tomcat follows the thread per request model, which means tomcat will assign a thread to each incoming request. Tomcot will maintain a thread pool, a free thread will be picked from the thread and assigned to the request. If there are no free threads available in the pool, tomcat will create a new thread if the thread pool size is below the maximum allowed. If the pool size has already reached maximum size, then the request will be queued.

What is the optimal thread pool size

Tomcat default values for max pool size 200, The optimal pool size depends on the application characteristics. One can profile the application with various pool sizes and pick the one which gives best performance.

If the application is CPU intensive, then the number of concurrent requests that can be served will be limited by the number of CPU cores available in the system. Increasing the thread count for CPU intensive applications will not result in increased throughput, the system will spend most of the time in context switching than doing the actual work.

If the application is mostly doing calls to DB and serving the results to clients, then the max pool size can be much more than the number of CPU cores of the system. For spingboot deployments, following properties can be used to control the worker pool size.

For versions older than 2.3.x

server.tomcat.min-spare-threads=10

server.tomcat.max-threads=200From 2.3.x, they became

server.tomcat.threads.min-spare=10

server.tomcat.threads.max=200If the applications have endpoints with different characteristics, it might be a good idea to group these endpoints based on characteristics and have different deployments. For example if the application has analytic end points that take 1 second to respond, clubbing them together with endpoints that have 10ms response time may result in queue building and idle CPU ( this happens because the analytics endpoint will hold the thread until the response comes back from the DB). Large queue build up will cause spikes in latencies for clients and may also cause timeouts. The queue size can be controlled with max-connections and accept-count parameters. Tomcat will keep accepting the new connections until max-connections limit is reached, once this limit is reached connections will not be accepted by tomcat, hence they will be queued at OS level. OS will queue the connection until accept-count is reached, then connections will be refused.

server.tomcat.max-connections=8192

server.tomcat.accept-count=100If requests takes on an average T milliseconds (when the thread pool is at its max size), then RPM that can handled by the system can be computed by the following formula

RPM = (60000/ (Tavg)) * thread_pool_sizeIf each request takes 100ms, and we have 10 threads, then we can serve up to 6K RPM. If more requests come in then tomcat will start queuing the requests.