Let us learn TensorFlow Lite

What is TensorFlow Lite? TensorFlow Lite is a framework for deploying ML models on mobile and embedded devices, built on TensorFlow's cross-platform framework. TFLite supports Swift, Python and JavaScript.

Before going into TFLite, let us see why we need edge computing when powerful cloud computing is available.

Low latency requirements, poor connectivity and privacy demands are the three driving forces that make edge ML a must in the future.

This is why TFLite is worth knowing.

Next, let us look at what TFLite can bring to mobile and embedded devices.

Because TFLite runs inference in real time on the edge device, many use cases can be built.

For example, someone learning to dance can practice along with a trainer, and TFLite can show in real time how closely their performance matches the trainer's.
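One way such a match could be scored, sketched here under assumptions: a pose estimation model (PoseNet-style) returns a fixed set of (x, y) keypoints per frame, and the learner's keypoints are compared with the trainer's after removing position and scale. The functions `normalize_pose` and `pose_similarity` and the centering-plus-cosine-similarity approach are hypothetical illustrations, not part of TFLite.

```python
import numpy as np

def normalize_pose(keypoints):
    """Center keypoints on their mean and scale them to unit norm.

    keypoints: array of shape (num_keypoints, 2) holding (x, y) positions.
    This removes where the person stands in the frame and how large they appear.
    """
    pts = np.asarray(keypoints, dtype=np.float64)
    centered = pts - pts.mean(axis=0)
    norm = np.linalg.norm(centered)
    if norm == 0:
        raise ValueError("all keypoints coincide; cannot normalize")
    return centered / norm

def pose_similarity(learner, trainer):
    """Cosine similarity of two normalized poses, in [-1, 1] (1 = same shape)."""
    a = normalize_pose(learner).ravel()
    b = normalize_pose(trainer).ravel()
    return float(np.dot(a, b))  # both vectors already have unit norm

# Hypothetical keypoints (head, left hand, right hand, feet), normalized to [0, 1].
trainer = np.array([[0.5, 0.1], [0.4, 0.4], [0.6, 0.4], [0.5, 0.8]])
learner = trainer * 2.0 + 0.3  # same pose, but larger and shifted in the frame
print(round(pose_similarity(learner, trainer), 6))  # 1.0
```

Running this per frame on the keypoints from both videos would give a live "match" score; a real app would likely also weight keypoints by the model's confidence.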

You can check out my post on using TFLite with a microcontroller here.

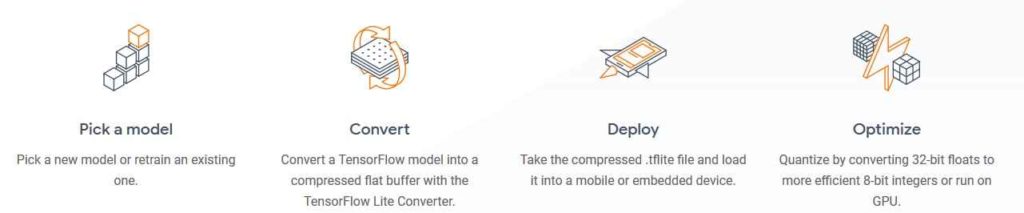

The main steps involved in using TFLite on a mobile (or embedded) device are:

- Collect data and generate a model (for example, retrain an existing model with data for the current use case)

- The resulting model is large, so convert it into a TFLite model (file extension .tflite)

- Deploy the model and run inference on the device

- Depending on the use case, optimize the model further
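Optimizing further usually means quantization. TFLite's 8-bit scheme represents a real value as `real = (q - zero_point) * scale`, and in the converter post-training quantization is switched on with `converter.optimizations = [tf.lite.Optimize.DEFAULT]`. The NumPy sketch below only illustrates that affine mapping for a single tensor with one min/max range; the helper names are hypothetical, and this is not how the converter works internally (for example, TFLite quantizes weights symmetrically and per channel).

```python
import numpy as np

QMIN, QMAX = -128, 127  # int8 range

def choose_qparams(x_min, x_max):
    """Pick scale and zero point so that [x_min, x_max] maps onto [QMIN, QMAX]."""
    # Extend the range to include 0.0 so that zero is exactly representable.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (QMAX - QMIN)
    if scale == 0.0:
        scale = 1.0  # degenerate all-zero tensor
    zero_point = int(np.clip(round(QMIN - x_min / scale), QMIN, QMAX))
    return scale, zero_point

def quantize(x, scale, zero_point):
    q = np.round(np.asarray(x) / scale) + zero_point
    return np.clip(q, QMIN, QMAX).astype(np.int8)

def dequantize(q, scale, zero_point):
    return (q.astype(np.float32) - zero_point) * scale

weights = np.array([-1.0, -0.5, 0.0, 0.25, 1.0], dtype=np.float32)
scale, zp = choose_qparams(float(weights.min()), float(weights.max()))
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)
# Each value is stored in 1 byte instead of 4; the error stays within half a step.
print(q.dtype, np.abs(restored - weights).max() <= scale / 2 + 1e-6)  # int8 True
```

The trade-off is the one the step list hints at: a 4× smaller tensor and integer arithmetic in exchange for a bounded rounding error, which is why it is worth checking accuracy per use case.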

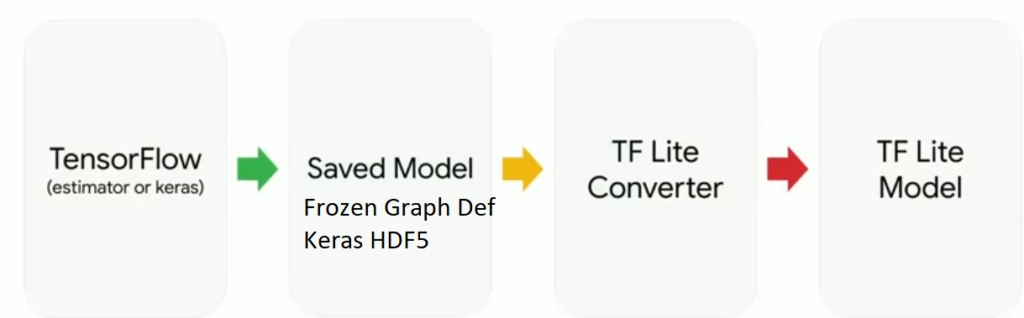

Converting the model is the easier step. Currently three model formats are supported: SavedModel, Keras HDF5 (.h5) and frozen graph definitions.

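Python code for converting the model:

```python
import tensorflow as tf

# Load the Keras HDF5 model and create a converter for it.
# (TensorFlow 1.x used tf.lite.TFLiteConverter.from_keras_model_file("keras_model.h5");
# in TensorFlow 2.x that method is only available under tf.compat.v1.)
model = tf.keras.models.load_model("keras_model.h5")
converter = tf.lite.TFLiteConverter.from_keras_model(model)

# Convert to the TFLite FlatBuffer format and write it to disk.
tflite_model = converter.convert()
with open("converted_model.tflite", "wb") as f:
    f.write(tflite_model)
```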

Code for running inference:

```python
import numpy as np
import tensorflow as tf

# Load the TFLite model and allocate its tensors.
interpreter = tf.lite.Interpreter(model_path="converted_model.tflite")
interpreter.allocate_tensors()

# Get input and output tensors.
input_details = interpreter.get_input_details()
output_details = interpreter.get_output_details()

# Test the model on random input data (assumes a float32 input tensor).
input_shape = input_details[0]['shape']
input_data = np.array(np.random.random_sample(input_shape), dtype=np.float32)
interpreter.set_tensor(input_details[0]['index'], input_data)

interpreter.invoke()

# The function `get_tensor()` returns a copy of the tensor data.
# Use `tensor()` in order to get a pointer to the tensor.
output_data = interpreter.get_tensor(output_details[0]['index'])
print(output_data)
```
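The random input in the inference code assumes a float32 model. A quantized model (for example a uint8 image classifier) expects integer input instead, and `get_input_details()` reports the tensor's `dtype` and its `(scale, zero_point)` pair under the `'quantization'` key. Below is a minimal sketch of a helper that converts float data accordingly; `prepare_input` is a hypothetical name, and the hand-written `details` dicts only mimic the fields the helper reads so the example runs without a model.

```python
import numpy as np

def prepare_input(data, details):
    """Convert float data to the dtype (and quantization) a model input expects.

    details: one entry of interpreter.get_input_details(); only the 'shape',
    'dtype' and 'quantization' fields are used here.
    """
    data = np.asarray(data, dtype=np.float32)
    dtype = details['dtype']
    scale, zero_point = details['quantization']
    if np.issubdtype(dtype, np.integer) and scale != 0:
        # real = (q - zero_point) * scale  =>  q = real / scale + zero_point
        info = np.iinfo(dtype)
        data = np.clip(np.round(data / scale) + zero_point, info.min, info.max)
    data = data.astype(dtype)
    # Add the batch dimension if the caller passed a single example.
    if data.ndim == len(details['shape']) - 1:
        data = np.expand_dims(data, axis=0)
    return data

# Hypothetical details for a uint8 input of shape [1, 2, 2, 1].
details = {'shape': np.array([1, 2, 2, 1]), 'dtype': np.uint8,
           'quantization': (1 / 255, 0)}
image = np.array([[[0.0], [0.2]], [[0.6], [1.0]]])  # pixel values in [0, 1]
x = prepare_input(image, details)
print(x.shape, x.dtype, x.ravel())
```

With a real model, the result would be passed as `interpreter.set_tensor(input_details[0]['index'], prepare_input(image, input_details[0]))`. For float models `'quantization'` is `(0.0, 0)`, so the data is only cast and batched.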

TensorFlow Lite has started work in five fields:

1) Object detection

2) Image classification

3) Pose estimation

4) Gesture recognition

5) Speech recognition

For all of these cases, a sample application with Android (and iOS) code is provided.
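For image classification, the output tensor holds one score per label, so the app's last step is post-processing. A possible sketch: dequantize the output if the model is quantized (using the `'quantization'` pair from `get_output_details()`), then pick the top-k labels. `top_k_labels` and the label list are made up for illustration; the example apps ship their own labels file.

```python
import numpy as np

def top_k_labels(output, labels, k=3, quantization=(0.0, 0)):
    """Return the k (label, score) pairs with the highest scores.

    output: the tensor returned by interpreter.get_tensor(), shape [1, num_labels].
    quantization: the output tensor's (scale, zero_point); (0.0, 0) means float output.
    """
    scores = np.asarray(output).reshape(-1).astype(np.float32)
    scale, zero_point = quantization
    if scale != 0:
        scores = (scores - zero_point) * scale  # real = (q - zero_point) * scale
    order = np.argsort(scores)[::-1][:k]  # indices sorted by descending score
    return [(labels[i], float(scores[i])) for i in order]

labels = ["background", "book", "cat", "person"]  # hypothetical labels file
quantized_output = np.array([[3, 200, 10, 42]], dtype=np.uint8)
print(top_k_labels(quantized_output, labels, k=2, quantization=(1 / 255, 0)))
```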

Some of the highlights of TensorFlow Lite are as follows:

- It supports a set of core operators that have been tuned for mobile platforms. TensorFlow Lite also supports custom operators in models.

- A new file format based on FlatBuffers.

- An on-device interpreter that uses a selective loading technique.

- When all supported operators are linked, TensorFlow Lite is smaller than 300 KB.

- Java and C++ API support.
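Because the .tflite format is a FlatBuffer, a file can be sanity-checked without TensorFlow: FlatBuffers store a 4-byte file identifier right after the 4-byte root table offset, and the TFLite schema declares that identifier as `TFL3`. A small sketch; `looks_like_tflite` and `check_file` are hypothetical helpers, and the check only confirms the header, not that the model itself is valid.

```python
def looks_like_tflite(data: bytes) -> bool:
    """Return True if data starts like a TFLite FlatBuffer.

    Assumed layout: bytes 0-3 hold the little-endian offset of the root table,
    bytes 4-7 hold the file identifier, which is b"TFL3" for TFLite models.
    """
    return len(data) >= 8 and data[4:8] == b"TFL3"

def check_file(path: str) -> bool:
    """Read only the first 8 bytes of a file and check the FlatBuffer header."""
    with open(path, "rb") as f:
        return looks_like_tflite(f.read(8))

# Example, with the file written by the conversion code:
# print(check_file("converted_model.tflite"))
```

This kind of check is cheap enough to run before handing a downloaded model to the interpreter.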

Some of the highlights on the embedded side:

- Support for the Apollo3 EVB (aka SparkFun Edge)

- Support for the STM32F746G Discovery board

- Support for the Arduino MKR Zero

I deployed the Android apps onto my phone.

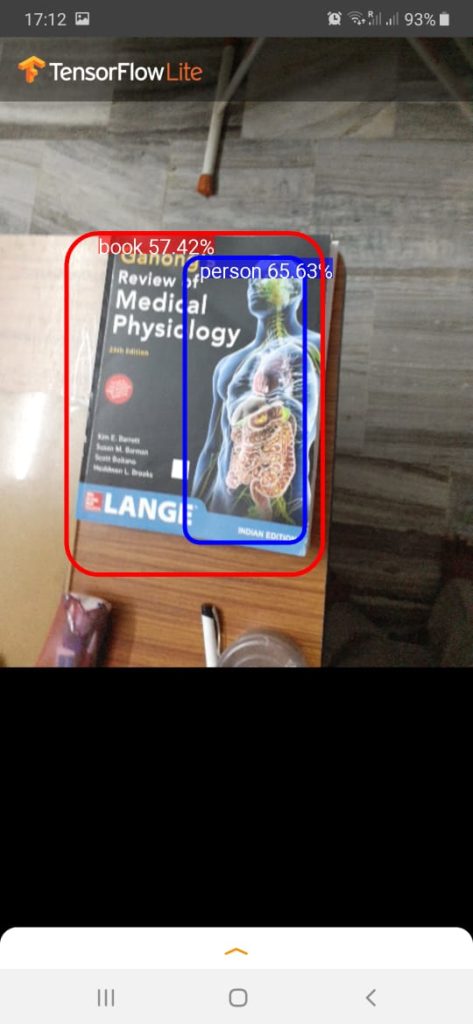

Object detection

The app identified not only the book but also a person on the book cover.
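Results like this come from post-processing the detector's outputs. Assuming the output layout of the SSD MobileNet example model (boxes as normalized `[ymin, xmin, ymax, xmax]`, then class indices, then scores), here is a sketch of filtering detections by a score threshold and converting boxes to pixel coordinates for drawing. `filter_detections`, the 0.5 threshold, the labels and the sample arrays are all hypothetical.

```python
import numpy as np

def filter_detections(boxes, classes, scores, labels, image_w, image_h, threshold=0.5):
    """Keep detections whose score reaches threshold; return pixel-space boxes.

    boxes:   [N, 4] normalized [ymin, xmin, ymax, xmax]
    classes: [N] class indices into labels
    scores:  [N] confidences in [0, 1]
    """
    results = []
    for box, cls, score in zip(boxes, classes, scores):
        if score < threshold:
            continue
        ymin, xmin, ymax, xmax = box
        results.append({
            "label": labels[int(cls)],
            "score": float(score),
            # Scale normalized coordinates to pixels: (left, top, right, bottom).
            "box": (int(round(xmin * image_w)), int(round(ymin * image_h)),
                    int(round(xmax * image_w)), int(round(ymax * image_h))),
        })
    return results

labels = ["person", "book"]  # hypothetical labels
boxes = np.array([[0.1, 0.2, 0.9, 0.8], [0.3, 0.3, 0.5, 0.5], [0.0, 0.0, 0.2, 0.2]])
classes = np.array([1, 0, 0])
scores = np.array([0.92, 0.71, 0.12])
for det in filter_detections(boxes, classes, scores, labels, 640, 480):
    print(det)
```

The low-scoring third box is dropped, which is how an app avoids drawing spurious labels.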
