NodeJS: Exporting large MongoDB collections

I recently implemented a REST endpoint that lets users start a background report generation task and download the report once it is ready. The base collection for the report is very large, so we can't simply load all of its documents into memory and process them; the memory footprint would be too high.

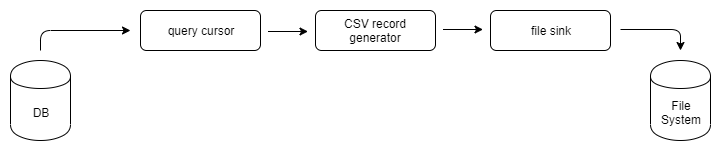

In this post we will see how to export or generate reports from a large MongoDB collection. Specifically, I will create a pipeline that generates a CSV report from a MongoDB collection.

My solution uses a MongoDB cursor. A cursor lets us read the documents one by one instead of loading them all into memory at once. The entire report generation pipeline is based on streams.

The pipeline consists of three components:

- a cursor on the collection

- a CSV record generator

- a file sink that writes the report to the file system
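To make the shape of the pipeline concrete before looking at the real components, here is a minimal sketch that uses only Node.js built-ins. It is an illustration under assumptions, not the actual implementation: `Readable.from` stands in for the collection cursor, the hypothetical `toCsvLine` transform stands in for the CSV record generator (it does no quoting or escaping), and `runPipeline` is a made-up helper name.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const { Readable, Transform } = require('stream');
const { pipeline } = require('stream/promises');

// Stand-in for the CSV record generator: turns one object into one CSV line.
// Deliberately simplistic: no quoting, no escaping, no header row.
function toCsvLine(columns) {
  return new Transform({
    writableObjectMode: true, // objects in, text out
    transform(doc, _encoding, callback) {
      callback(null, columns.map((c) => doc[c]).join(',') + '\n');
    },
  });
}

// Connects source -> transform -> sink; documents flow through one at a time.
async function runPipeline(docs, reportPath) {
  await pipeline(
    Readable.from(docs),              // stand-in for the collection cursor
    toCsvLine(['userId', 'email']),   // stand-in for the CSV record generator
    fs.createWriteStream(reportPath)  // file sink
  );
}

const reportPath = path.join(os.tmpdir(), 'pipeline-sketch.csv');
runPipeline(
  [
    { userId: 1, email: 'a@example.com' },
    { userId: 2, email: 'b@example.com' },
  ],
  reportPath
).then(() => console.log(`wrote ${reportPath}`));
```

Because `pipeline` handles backpressure, the source is only read as fast as the sink can write, which is what keeps memory usage flat regardless of collection size.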

Query Cursor

The cursor() method of the Mongoose Query interface returns a QueryCursor. QueryCursor implements the Node.js streams3 API, so we can pass the returned value directly to a pipeline.

    model.find({ /* some query */ }).cursor()

CSV Record Generator

To generate CSV records from JS objects, we can use csv-stringify. It has good support for the streaming API.

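    // Named export in csv-stringify v6; v5 exports the function directly
    const { stringify } = require('csv-stringify');

    // Maps document fields (keys) to CSV header names (values)
    const csvStream = stringify({
      header: true,
      columns: {
        userId: 'USER_ID',
        email: 'EMAIL',
        gender: 'GENDER',
      },
    });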

File Sink

Here we save the report to a local CSV file, but the sink can be any writable stream. We will use the Node.js file system method createWriteStream to create a writable stream.

    fs.createWriteStream(path, { autoClose: true })
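Since the sink only has to be a writable stream, it can be swapped without touching the rest of the pipeline. The sketch below illustrates this with a hypothetical in-memory sink, `MemorySink`, and a hypothetical helper, `writeReport`; neither is part of the original code. The same helper would accept a file stream or an HTTP response in its place.

```javascript
const { Readable, Writable } = require('stream');
const { pipeline } = require('stream/promises');

// Hypothetical in-memory sink: collects everything written to it.
// Only suitable for small outputs, since it defeats the point of streaming.
class MemorySink extends Writable {
  constructor() {
    super();
    this.chunks = [];
  }

  _write(chunk, _encoding, callback) {
    this.chunks.push(chunk); // strings arrive as Buffers by default
    callback();
  }

  toString() {
    return Buffer.concat(this.chunks).toString('utf8');
  }
}

// Sends CSV text to whichever writable stream the caller supplies.
async function writeReport(csvSource, sink) {
  await pipeline(csvSource, sink);
}

const sink = new MemorySink();
writeReport(Readable.from(['USER_ID,EMAIL\n', '1,a@example.com\n']), sink)
  .then(() => console.log(sink.toString()));
```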

Example Code snippet

For example, let's assume we have a test_db database with a user collection. We can generate a report of all active users using the following snippet.
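A minimal sketch of how the three components could be wired together for this example. Several things here are assumptions rather than details from the post: the `active` field used in the query, the schema definition, the connection URL, the output path, the use of `.lean()` to get plain objects, and the helper names `exportActiveUsers` and `main`. It also assumes Mongoose and csv-stringify v6 are installed.

```javascript
const fs = require('fs');
const { pipeline } = require('stream/promises');

// Wires cursor -> CSV record generator -> file sink.
// `User` is a Mongoose model; `stringify` is the factory from csv-stringify.
async function exportActiveUsers(User, stringify, reportPath) {
  // Assumption: active users are marked with an `active: true` field.
  // lean() makes the cursor yield plain objects instead of Mongoose documents.
  const cursor = User.find({ active: true }).lean().cursor();

  const csvStream = stringify({
    header: true,
    columns: { userId: 'USER_ID', email: 'EMAIL', gender: 'GENDER' },
  });

  const fileSink = fs.createWriteStream(reportPath, { autoClose: true });

  // Resolves once the file has been fully written; rejects on any stream error.
  await pipeline(cursor, csvStream, fileSink);
}

// Hypothetical entry point; dependencies are loaded here so the helper above
// stays independent of how the model and stringifier are obtained.
async function main() {
  const mongoose = require('mongoose');
  const { stringify } = require('csv-stringify'); // v6 named export

  await mongoose.connect('mongodb://localhost:27017/test_db'); // assumed URL
  const userSchema = new mongoose.Schema({
    userId: String,
    email: String,
    gender: String,
    active: Boolean,
  });
  // Third argument pins the collection name to "user".
  const User = mongoose.model('User', userSchema, 'user');

  try {
    await exportActiveUsers(User, stringify, './active-users.csv');
  } finally {
    await mongoose.disconnect();
  }
}

// main().catch(console.error); // uncomment to run against a real database
```

Passing the model and the stringifier in as arguments is a design choice made for this sketch: it lets the pipeline be exercised with stand-in streams without a running database.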

Hey,

Thanks, this lets me export very large collections without running out of RAM.

Hi,

I have a collection with millions of records, and I would like to export it to a file with one JSON record per line. How can I do that using your script?

Thanks

Hi,

This script makes it easy to export very large MongoDB collections using Node.js. Did you run any benchmarks?